Keep your Bicep code WAF aligned using DevOps Pipelines.

Azure Spring Clean 2025

Hello everyone! Welcome to this blog post, which I am writing for Azure Spring Clean 2025. Azure Spring Clean is a community-driven initiative that focuses this year on the Azure Management Umbrella; topics could include Azure Monitor, Azure Cost Management, Azure Policy, Azure Security Principles or Azure Foundations. You can check the rest of the speaker wall over here! A big shout-out to Thomas Thornton and Joe Carlyle.

First, let me introduce myself. My name is Gert-Jan Poffers. I am an Azure Consultant and work for a very cool company, Intercept, located in Zwolle in the Netherlands.

In this post I will show how you can keep your code aligned with the Azure Well-Architected Framework (WAF) and with your organization's standards whenever a branch is merged in Azure DevOps. This way you can be sure that your code complies with both, even when multiple teams are working on the same code.

To do this, we combine a branch protection policy with PSRule, which scans code such as Bicep for the use of best practices.

I would like to show you how we can use this and deploy it to keep your code WAF aligned and your environment secure.

Branch and Branch Policies

Let's start at the beginning. When you start working with a team in a repository, it is good not to develop all the code directly on the main branch. You want to use the main branch as a basis of which you know that the code is good, reliable and stable. It is therefore good to work with branches: when you or a teammate wants to make an adjustment or improvement to the code, a new branch can be created based on the main branch. In this branch you can make any changes you want while leaving the main branch untouched.

But what if you want to integrate the changes you made with the main branch?

Simple, you can merge your branch with the main branch. But you do not want to have this merge done without any intervention from a colleague or a policy, which is why it is good to put protection on the merge process.

It is advisable to enable a protection policy on the branch, for example, to only allow merging when someone has reviewed your code or, for example, to require that all comments must first be viewed and resolved before a merge can take place.

You can also add a build validation step as a condition for merging. After you push your code, a pipeline runs and uses PSRule to scan the code. If the code does not meet the standards, the pipeline fails and the branch cannot be merged.

What is PSRule and how do we set it up?

PSRule is a rule engine that validates your Infrastructure as Code. It checks your code against best practices and security and compliance standards, and supports Bicep, Terraform and YAML files. It integrates with multiple platforms for code scanning, such as Azure DevOps, GitHub Actions and PowerShell.

PSRule ships with multiple baselines based on best practices. By referring to one of those baselines you can have your code validated, and the results are returned in a report. You can act on these results, but you can also ignore them by building in exclusions, for example when your organization understands a recommendation but cannot implement it for various reasons.

We set this up in Azure DevOps and this check is done by using two YAML files that you place in the source of your repository.

| File Name | Description |
|---|---|
| ps-rule.yaml | The PSRule options file. It references the PSRule module, configures how the baseline is checked and applies exceptions. |
| analyze-bicep.yaml | The pipeline definition. It triggers on a merge, handles validation and flags Bicep code that fails the baseline. |

Let's zoom in on the ps-rule.yaml file we are going to use:

- In the include section you reference the PSRule module, in this case PSRule.Rules.Azure.

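- In the input section, pathIgnore controls which files are picked up. In this file it first excludes all files with "*" and then uses a negated pattern ('!**/*.bicepparam') for the location of my bicepparam file, so the rest of the repository is not scanned as separate input.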

- The configuration section is optional; in this example I use a couple of settings. I will explain some of them later in this post, and for an explanation of all the rules you can check this documentation.

The full file looks like this:

    # Use PSRule for Azure.
    include:
      module:
      - PSRule.Rules.Azure

    output:
      culture:
      - "en-US"
      banner: Minimal
      outcome: 'None'

    input:
      pathIgnore:
      # Exclude all files.
      - "*"
      - '!**/*.bicepparam'

    configuration:
      # Enable automatic expansion of Azure parameter files.
      AZURE_PARAMETER_FILE_EXPANSION: false

      # Enable automatic expansion of Azure Bicep source files.
      AZURE_BICEP_FILE_EXPANSION: true

      # Configures the number of seconds to wait for build Bicep files.
      AZURE_BICEP_FILE_EXPANSION_TIMEOUT: 20

      # Custom non-sensitive parameters' names
      AZURE_DEPLOYMENT_NONSENSITIVE_PARAMETER_NAMES: []

    rule:
      # Enable custom rules that don't exist in the baseline
      includeLocal: false
      exclude:
      # Ignore the following rules for all resources

    suppression:
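The suppression key in this file is left empty. As a minimal sketch of how it could be filled in, assuming you want to accept a finding for one specific resource only rather than excluding the rule everywhere: the rule name Azure.Storage.UseReplication is a PSRule for Azure rule, while the storage account name stpsruleprod is a hypothetical example and not something this setup requires.

```yaml
# ps-rule.yaml (fragment): suppress one rule for one named resource.
# Unlike rule.exclude, which switches a rule off for all resources,
# a suppression only applies to the listed target names.
suppression:
  Azure.Storage.UseReplication:
  # Hypothetical storage account name, replace with your own.
  - stpsruleprod
```

Keeping exceptions this narrow means the rule still fires for any other storage account that is added later.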

Let's zoom in on the analyze-bicep.yaml file.

- It triggers when a branch is merged to main.

- It checks out the repository.

- It uses the PSRule module.

- It uses the Azure.All baseline.

- It saves the output in SARIF format.

For that last part, saving the output in SARIF format, you need to do some extra setup in Azure DevOps, which is covered in the next section.

    trigger:
      - main # Trigger on merge

    pool:
      vmImage: 'ubuntu-latest'

    steps:
    - checkout: self # Ensure repo is checked out

    # Analyze Azure resources using PSRule for Azure
    - task: ps-rule-assert@2
      displayName: Analyze Azure template files
      inputs:
        modules: 'PSRule.Rules.Azure'
        baseline: Azure.All
        outputFormat: Sarif
        outputPath: reports/ps-rule-results.sarif
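The pipeline above writes the SARIF file to disk but does not publish it. If the report does not show up in the pipeline run for you, one option is to publish it as a build artifact. The step below is a sketch and not part of my original pipeline: it assumes the SARIF viewer extension you installed reads an artifact named CodeAnalysisLogs (the name the SARIF SAST Scans Tab extension looks for), and it reuses the outputPath from the ps-rule-assert task.

```yaml
# analyze-bicep.yaml (fragment): extra step to append under "steps:".
# Assumption: the SARIF viewer picks up the artifact named CodeAnalysisLogs.
- task: PublishBuildArtifacts@1
  displayName: Publish PSRule results
  # Run even when the PSRule step failed, otherwise the report is lost
  # exactly when you need it.
  condition: succeededOrFailed()
  inputs:
    PathtoPublish: reports/ps-rule-results.sarif
    ArtifactName: CodeAnalysisLogs
```

The condition matters here because ps-rule-assert fails the job when rules fail, and by default later steps are then skipped.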

How to set this up in Azure DevOps

We need a few things to set this up within Azure DevOps, namely:

- In your DevOps organization, create a repository or use an existing one.

- Store your YAML files in the repository. I assume you know how to do this using Git; if not, click on the link. Create the YAML files in an editor like VS Code and push them to your repository.

- Next, configure the pipeline.

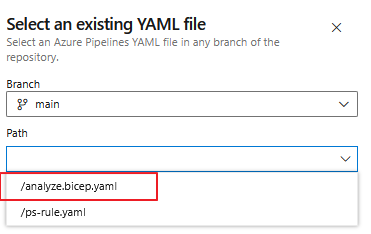

Go to Pipelines and click on "New pipeline". Choose "Azure Repos Git" and select your repository. In the next step choose "Existing Azure Pipelines YAML file", select your analyze-bicep.yaml file and click on "Continue". In the final step click on "Save" and it will be stored as your pipeline.

- We need to install the PSRule extension in the Azure DevOps organization. We can do that from the Marketplace in the browser.

- We need to install the SARIF extension in the Azure DevOps organization. This is also done from the Marketplace.

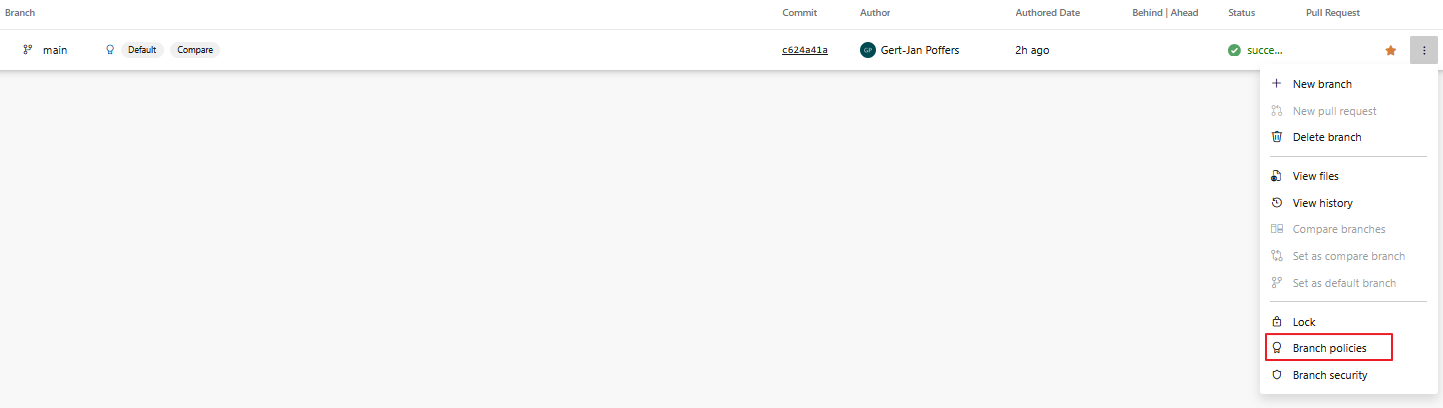

- We need to set up a branch policy on the main branch.

Go to the repository and click on Branches. On the main branch, click on the dots and choose "Branch policies".

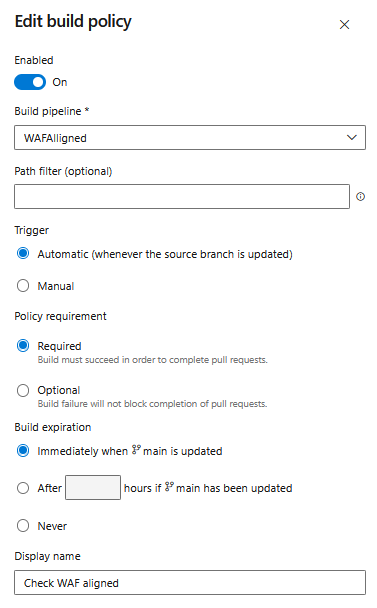

Click on the plus and fill in the necessary values. In my example below, I select the pipeline that was created in the first step, choose "Automatic" as the trigger and mark the policy as required, which means that the build needs to succeed before the pull request can be completed. As a final step, fill in the Display Name.

Cool, we have added the necessary files and configured everything we need, so let's proceed!

Let's test the set-up

Let's start with a Bicep file that I created, in which a storage account, a key vault and a resource group are created. I also use the Azure Verified Modules in combination with a bicepparam file, and I have stored them in an infra folder.

It is good to add that PSRule will only check the values you have added in your Bicep code. It does not work out on its own which settings would be needed for your code to be WAF aligned; it only checks the values and settings you have put into the code. Therefore it is good to use the Azure Verified Modules, which give you a good overview of WAF-aligned Bicep code.

    targetScope = 'subscription'

    param updatedBy string

    @allowed([
      'test'
      'dev'
      'prod'
      'acc'
    ])
    param environmentType string

    param subscriptionId string

    @description('Unique identifier for the deployment')
    param deploymentGuid string = newGuid()

    @description('Product Type: example avd.')
    @allowed([
      'psrule'
    ])
    param productType string

    @description('Azure Region to deploy the resources in.')
    @allowed([
      'westeurope'
      'northeurope'
    ])
    param location string = 'westeurope'

    @description('Location shortcode. Used for end of resource names.')
    param locationShortCode string

    @description('Add tags as required as Name:Value')
    param tags object = {
      Environment: environmentType
      LastUpdatedOn: utcNow('d')
      LastDeployedBy: updatedBy
    }

    param resourceGroupName string = 'rg-${productType}-${environmentType}-${locationShortCode}'

    param storageAccountName string
    param storageAccountSku string

    //parameters for the Key Vault.
    param keyVaultName string

    @description('Admin password')
    @secure()
    param domainAdminPass string

    // Deploy required Resource Groups - New Resources
    module createResourceGroup 'br/public:avm/res/resources/resource-group:0.4.0' = {
      scope: subscription(subscriptionId)
      name: 'rg-${deploymentGuid}'
      params: {
        name: resourceGroupName
        location: location
        tags: tags
      }
    }

    module createStorageAccount 'br/public:avm/res/storage/storage-account:0.14.1' = {
      scope: resourceGroup(resourceGroupName)
      name: 'stg-${deploymentGuid}'
      params: {
        name: storageAccountName
        skuName: storageAccountSku
        fileServices: {
          Shares: [
            {
              name: 'fslogix'
              shareQuota: 20
            }
          ]
        }
        tags: tags
      }
      dependsOn: [createResourceGroup]
    }

    module createKeyVault 'br/public:avm/res/key-vault/vault:0.11.2' = {
      scope: resourceGroup(resourceGroupName)
      name: 'kv-${deploymentGuid}'
      params: {
        name: keyVaultName
        secrets: [
          {
            name: 'DomainAdminPass'
            value: domainAdminPass
          }
        ]
        enablePurgeProtection: false
        location: location
        tags: tags
      }
    }

And this is my bicepparam file:

    using 'main.bicep'

    //parameters for the deployment.
    param updatedBy = 'yourname'
    param subscriptionId = ''
    param environmentType = 'prod'
    param location = 'westeurope'
    param locationShortCode = ''
    param productType = 'psrule'

    //Parameters for the Storage Account.
    param storageAccountName = 'st${productType}${environmentType}${locationShortCode}'
    param storageAccountSku = 'Standard_LRS'

    //Parameters for the Key Vault
    param keyVaultName = 'kv-psrule-${productType}-${environmentType}-${locationShortCode}'
    param domainAdminPass = 'psrulepassword'

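When you have set up your Bicep files, you can create a new branch in VS Code using git checkout -b testbranch. Once the branch exists, commit your changes and push it to the repository, for example with: git add . && git commit -m "testing bicep files" && git push -u origin testbranch. The -u flag sets the upstream for the new branch (assuming your remote is named origin); a plain git push on a brand-new branch is normally rejected because no upstream is configured yet. You can also use the source control tools in VS Code when the right extension is installed.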

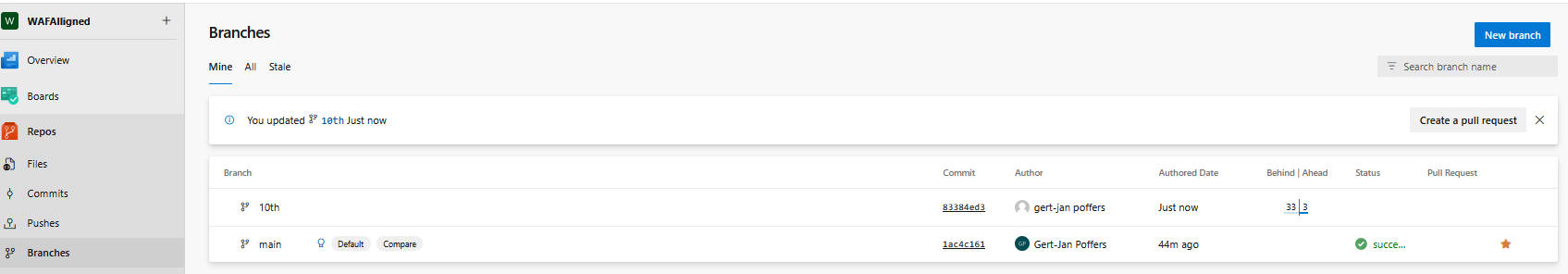

Your files are now pushed to the repository in your own branch. Go to your Azure DevOps environment, open the repository and click on Branches.

When you want to merge your branch into the main branch, click on "Create a pull request", enter a title and click "Create". It will now do the first check and start the pipeline job. You can also turn on the option "Set auto-complete", which means that after a successful run the branch is merged and cleaned up automatically:

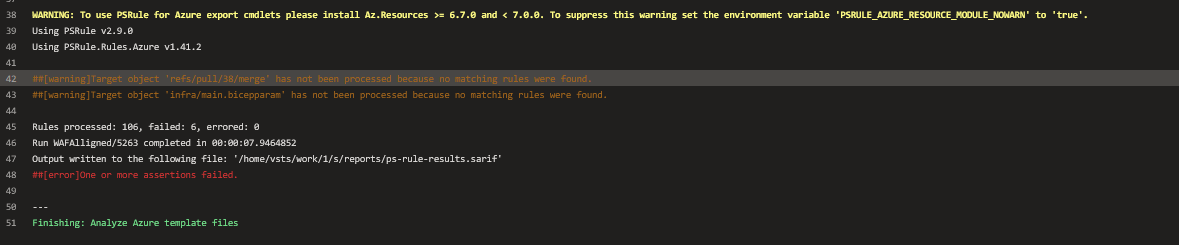

When you click on the job you will see that it is failing, because some of the assertion rules returned failed results.

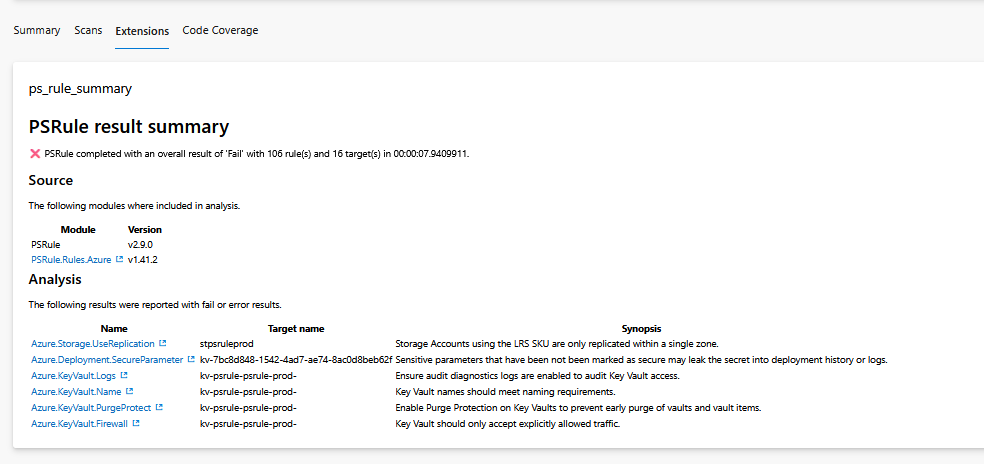

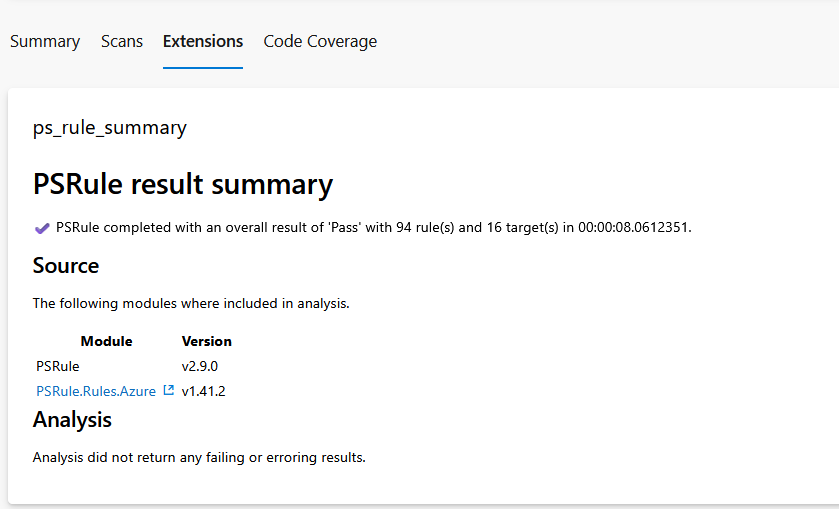

Go back to the pipeline and click on the extensions tab:

As you can see it has some bicep configurations it doesn't like, so let's fix them before we can merge them to the main branch.

How can we fix this

We can solve this in two ways: applying the baseline rules that are part of the WAF or your organization standard or applying exception rules that suppress the errors and therefore accept that it is not in accordance with the WAF standards.

It is of course not always possible to apply a complete WAF standard because, for example, the existing production environment will suffer from this, so applying exceptions is certainly not always a wrong thing to do.

One of the advantages of using PSRule check is that it allows you to look closely at your own code and make possible improvements to the final infrastructure.

Let's use both solutions to make the pipeline successful!

If you go back to the failed pipeline and click on the extensions tab, you will see the report. The top result says that the Storage Account is not replicated to another region. If you click on the link, you will be redirected to the PSRule documentation.

This documentation explains the recommendation. Of course you can decide not to use GRS and stay on LRS storage; in that case you need to add this rule to the exceptions. But for now I will change storageAccountSku from 'Standard_LRS' to 'Standard_GRS' in my bicepparam file.

Let's run it again by saving the file and running git add . && git commit -m "fixing LRS to GRS storage" && git push in VS Code:

Because the pull request for your branch is still open, the push triggers the same pipeline again and the files are re-checked.

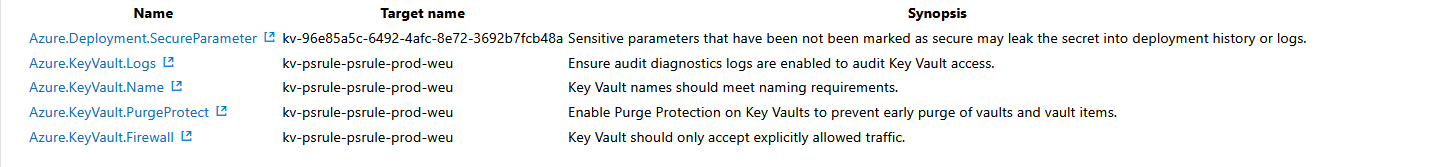

It is still failing but with another result:

That makes sense, because we have fixed the storage recommendation but not the ones for the Key Vault, so let me fix those:

For the Key Vault I will change the Bicep file and set enablePurgeProtection to true.

I will deny public access and set the default action of the network ACLs to Deny.

For the secret, I will add the parameter to AZURE_DEPLOYMENT_NONSENSITIVE_PARAMETER_NAMES in the ps-rule.yaml file, because this is a demo and I understand the risks ;-)

I have no logging in place, so I will add the rule Azure.KeyVault.Logs to the exclude list under rule in the ps-rule.yaml file.
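To make the two ps-rule.yaml changes concrete, here is a sketch of only the sections that change. The parameter name domainAdminPass is my assumption based on the Bicep file earlier in this post, so check the name in your own report before copying it. These keys need to be merged into the existing configuration and rule sections of the file, not added as duplicate top-level keys.

```yaml
# ps-rule.yaml (fragment): merge into the existing sections.
configuration:
  # Parameter names PSRule should not treat as sensitive.
  # Assumption: 'domainAdminPass' is the parameter flagged in the report.
  AZURE_DEPLOYMENT_NONSENSITIVE_PARAMETER_NAMES:
  - domainAdminPass

rule:
  # Enable custom rules that don't exist in the baseline
  includeLocal: false
  exclude:
  # Ignore the following rules for all resources
  - Azure.KeyVault.Logs
```

Both entries are deliberate exceptions for a demo. In a real environment it is worth writing down next to each entry why it was accepted, so a later reviewer can revisit the decision.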

Save the files, run git add . && git commit -m "change key-vault values" && git push and check the pipeline:

The pipeline has now done its job and the code passes the checks, so you can merge the branch yourself if auto-complete was not enabled.

Conclusion

Using branch policies helps teams keep their Infrastructure as Code stable and reliable. By making changes in separate branches, developers avoid breaking the main branch and ensure that only reviewed and tested code gets merged. Combining this with a tool like PSRule strengthens that process further.

PSRule adds another layer of protection by checking Infrastructure as Code against best practices and compliance rules. In Azure DevOps, it runs automatically when you have set the right yaml controls, making sure only approved code makes it through.

This combination ensures that careful consideration is given when writing code and that compliance and reliability are examined before a merge can take place. As a result, the WAF and organizational standards are maintained in the main branch, and a stable and compliant environment is deployed and maintained.

I hope that after reading this blog you have been triggered to test this and perhaps use it in the future. If you have any further questions about this, I will be happy to talk to you about it. Until next time.